For the data visualization community all around the world, the last week of October was marked by IEEE VIS 2021. This was the second year that the conference on Visualization & Visual Analytics took place virtually, with some New Orleans vibes through themed Discord channels and Gather Town. Over 3,047 people registered for the conference. About 2,000 were new attendees, and more countries were newly represented at VIS. Although lower than last year’s, the attendance was more than double the in-person record (1,250 attendees), set in 2018.

IEEE VIS ran six days with about eight hours of content daily. With the different tracks running in parallel, it was easy to miss many talks and sessions that we would have loved to attend. Fortunately, it is possible to catch up from the recordings, but that is also a lot of great content to take in and it can be hard to know where to start.

In this article, we share a few highlights covering various categories of content from the conference.

Noëlle comments on Vis For Social Good, VizSec, Evaluation of Complex Interactive Systems (meetup), and Accessible Visualization and Natural Language.

Matt comments on Happy Surprises in Visualization Design, Multimodal Manifestations of Data, Novel Visual Encoding Combinations, Tools for Visual Communication with Data, and Reflecting on Research and Practice.

Yiren comments on presentations from Vis X AI, Interaction, and Immersive environments.

Noëlle’s highlights

This is my second virtual VIS, and second year as a Student Volunteer (SV). And like last year, the huge amount of interesting content at VIS can be overwhelming. Fortunately, between the networking, meetups, and SV duties, I could attend a few subsets of sessions and activities. Here are my highlights:

Visualization for Social Good

The Visualization for Social Good (Vis4Good) workshop aims “to provide a central venue for work that critiques, defines, or explores the impact of data visualization on society.” This year, Vis4Good kicked off with a keynote about the “Do No Harm Guide – Applying Racial Equity Awareness in Data Visualization” by Jon Schwabish and Alice Feng from Urban Institute. The Do No Harm Guide brings the data visualization community’s attention to the variety of groups, identities, and demographics of the people we are focusing on or communicating with. Check out the keynote video to learn how we, the data visualization community, can contribute to ongoing discussions about racial equity.

Vis4Good ran the whole Monday morning with other notable works on the importance of data visualization in ongoing pandemic-related discussions, and works showing the measurable impact of data visualization on the quality of life in local communities.

Visualization for Cybersecurity

This year, a total of ten papers were accepted at VizSec, which is double the number from last year. The presented research covered topics in threat detection, computer forensics, software vulnerability analysis, machine learning, and privacy.

It is interesting to see how the focus of research related to data visualization for cybersecurity has evolved along with the cybersecurity domain itself. The keynote presentation highlighted the lessons learned and the progress made over the 17 years of VizSec, and especially how many of the tools and techniques developed by VizSec researchers are finding applications within different industries. Examples of these applications include the BUCEPHALUS tool from the AWARE Sapienza lab, which uses organizations’ vulnerability reports to help reduce cyber-exposure, and research by Fabian Böhm et al., which is used by expert analysts for conducting live digital forensics investigations.

A second highlight from this year’s VizSec is the best paper by Robert Gove et al. on using summarization techniques to reduce narrative sizes in incident logs and reports. This work shows how information in incident reports, like tabular log data, can be transformed into a useful format such as dynamic graphs, then further summarized, while keeping consistency and achieving succinctness.

Meetup: Evaluating Complex Interactive Systems

Unlike regular sessions, meetups at VIS are centered on one specific topic and typically start from a discussion of ongoing projects by the moderators. Meetups are opportunities for the attendees to exchange their methods for addressing open questions in a field.

One of Wednesday’s meetups was about Evaluating Complex Interactive Visualizations, organized by Carolina Nobre, Alexander Lex, and Lane Harrison. It started off with a presentation by Carolina about their recent work, followed by attendees sharing their approaches to evaluating complex or multivariate visualizations.

Questions that were discussed during this one-hour meeting also included: how to train novice users to use complex data visualizations, what type of experiments should be designed to train them, and what kind of interaction data should be captured to evaluate complex data visualizations.

Meetup: VisBuddies

VisBuddies brings together new and returning VIS attendees with similar interests. Newcomers are paired with experienced researchers in the field, and returning attendees can also meet members joining VIS for the first time.

This year, VisBuddies took place Tuesday on Zoom and about 150 registered participants met in groups with their buddies in breakout rooms. The highlight of this year’s meetup was that the virtual format brought new attendees from different regions of the world who perhaps wouldn’t have been able to join otherwise.

VisBuddies is one of my favorite activities at the conference. If you are planning to attend VIS in the future, I recommend signing up for the VisBuddies meetup to enjoy the sense of community through new connections and even start new collaborations.

Accessible Visualization and Natural Language

This was one of the sessions I was very much looking forward to. As someone interested in the combination of data visualization and natural language, I was happy to look at the challenges to make data visualizations more accessible from different perspectives and in different applications as well.

First, the work by Alan Lundgard et al. analyzed textual descriptions of visualizations such as captions and alternative texts and derived four levels of the semantic content that these descriptions convey. The highlight of their talk was that the mixed-method evaluation showed that authors of chart descriptions are almost always sighted, but what sighted authors believe is useful in chart descriptions differs from what is actually useful to users who are blind.

Crescentia Jung et al.’s work, “Communicating Visualizations without Visuals: Investigation of Visualization Alternative Text for People with Visual Impairments,” is also about descriptive texts. This three-phase study surveyed the current practices regarding the use of alternative texts in academic research papers in 2019 and 2020 of the VIS & TVCG, ASSETS, and CHI collections and found that only 40 percent of the figures contained alternative texts. They observed that some of these texts even use structures that do not provide any useful information for people who are blind. Their semi-structured interviews with 22 participants with visual impairment surfaced the participants’ motivation to understand a visualization, their mental model of the visualization, and most importantly, new information and style needs for future alternative texts.

My third highlight from this session is the presentation about “Words of Estimative Correlation: Studying Verbalizations of Scatterplots.” In this work, Rafael Henkin and Cagatay Turkay use crowdsourced experiments and NLP pipelines to collect and categorize utterances from participants, and understand the different ways they verbalize correlation seen through scatterplots. From one of the experiments in which participants verbally describe the relationship between two variables in a scatterplot, the findings comprise two categories: a group of five Concepts (descriptions of elements of the data and plots) and a group of five Traits (words qualifying and quantifying the concepts). Overall, the study not only showcases the different combinations of concepts and traits that people use to talk about correlation in scatterplots, but also opens interesting research questions around the general use of verbalization with data visualizations.

Student Volunteers Party

This is not really a session, but as a proud student volunteer myself, I feel compelled to mention the hard work that the student volunteers put in to help run the conference smoothly. SVs are PhD and Master’s students from colleges and universities around the world. For this second virtual VIS, SVs worked an average of fifteen hours as tech support or session moderators. We are the ones behind the countdowns before going live, or the copy-paste of unanswered Slido questions on Discord. We are grateful for every participant’s patience and collaboration, for our SV chairs (Nicole Sultanum, Beatrice Gobbo, Bon Adriel Aseniero, Juan Trelles), and for the captains who kept everything neat, organized, and enjoyable. And most importantly, our hats off to the Tech Chairs, who we thought were machines but are actually hard-working, beautiful humans.

Matt’s highlights

IEEE VIS 2021 was my tenth VIS conference. Like last year, I was missing opportunities to connect with colleagues in person, though at least I was fortunate to attend a satellite event at the University of Washington (video), which had its own Pacific-time program of lightning talks and panel discussions.

There is always plenty to see at VIS, and like my co-authors, I have only seen a small subset of the 151 paper presentations and the content presented in the conference’s associated events. My personal highlights, beyond the work that I presented (written about here and here), align with my interests in visualization as a creative medium for communication, so my coverage is skewed toward a few thematically-related paper sessions and two associated events: the VIS Arts program (visap.net) and the Visualization for Communication workshop (viscomm.io).

Happy Surprises in Visualization Design

Whenever a visualization project introduces a unique visual encoding or incorporates a new visual metaphor, I love hearing about the process that led to the final design. Fernanda Viégas and Martin Wattenberg’s capstone address delivered at the end of the conference told several of these process stories, focusing particularly on what they described as happy surprises in visualization design: the emergent or unexpected visual phenomena resulting from counterintuitive design choices or oversights in the implementation (“bugs might be features”), revealing aspects of the data with a novel and memorable visual structure. One viewer’s response to one of their projects perfectly captures this phenomenon: “[the visualizations] look like art and make you look twice, then all of a sudden you are reading, thinking, feeling good, learning.” Of course, to achieve responses like this, Fernanda and Martin remind us that, “if you don’t try a lot of things, you’re going to miss stuff.”

The theme of serendipity (or “beautiful mistakes”) in visualization design was also evident in several other projects presented earlier in the week. The DaRT project (presented by Rene Cutura) is one instance of this; admittedly discovered by accident, Cutura and colleagues described how their attempts to reconstruct photographs and artworks using alternative Dimensionality Reduction (DR) techniques resulted both in oddly compelling images as well as new insights into the behavior of the different DR techniques.

Our own Diatoms project similarly aspired to happy surprises in visualization design. We considered the potential of shifting a visualization designer’s agency from making individual visual encoding choices to governing a semi-random encoding channel sampling process, ideally revealing a few promising encoding combinations that would have otherwise been overlooked.

Inspiration for novel visualization design can come from unlikely sources; implementation oversights, unusual input data, and random sampling are just some of the new ways by which we can be inspired to visualize data. However, some of the best ideas come from stepping away from the data; in a discussion of how visualization practitioners overcome design fixation and unlock their creative potential (an interview study led by Chorong Park), we heard how practitioners find inspiration at museums and galleries, from walks in nature, and even from visual associations revealed to them in dreams.

Novel Visual Encoding Combinations

Explore Mindfulness without Deflection https://youtu.be/eQz6AhzjP9A

Decoding / Encoding https://youtu.be/w00sDhgmLvw

GlyphCreator https://youtu.be/kIQT6LjbVOU

Each year at VIS, I find myself on the lookout for novel visual encodings, or new ways of combining encodings. This is particularly evident in the design of glyphs, or compact spatial arrangements of multiple marks where each mark and its visual properties are associated with an attribute of the data. Glyphs often appear in small multiples arrangements, where each glyph corresponds with a particular data point. Like our Diatoms project mentioned above, the GlyphCreator project presented by Lu Ying proposed a new approach to glyph design, one focusing on circular glyphs and a deep learning approach to transfer visual encoding assignments from example glyphs.

While GlyphCreator and Diatoms propose alternative approaches to glyph visualization, I also spotted some unique instances of glyph design in other VIS presentations. Two of these were found in the VIS Arts program: Wang Yifang presented circular glyphs representing properties of the Book of Songs from China’s late Zhou Dynasty, while Song Anqi presented glyphs that represented aspects of Tibetan calligraphy. Other examples of glyph design could be found in a design space of anthropographics presented by Luiz Morais, where each glyph exhibited several visual properties mapped to different attributes of an individual person. I’m particularly captivated by compact combinations of data marks and figurative elements within glyphs, recalling Lydia Byrne’s 2019 critical analysis of this part of the visualization design space.

Multimodal Manifestations of Data

Visualizing Life in the Deep https://youtu.be/0OvUuOxIRHI

Deep Connection https://youtu.be/jlY4lKCuNQQ

Interspecies Umwelten https://youtu.be/H1dbCslT48Y

Between discussions around accessibility in data visualization, a workshop on human-data-interaction, a data sonification workshop, and a conference session dedicated to data physicalization, it is evident that the VIS community is thinking more deliberately about non-visual representations of data. In a VIS Arts Program presentation by Karin von Ompteda, we were introduced to the term data manifestation, which I feel is particularly useful for describing multimodal representations of data. Many of the projects described by VISAP keynote speaker Jer Thorp could be described in this way, such as his light and sound installation of seismic glacier activity in a public Calgary plaza, which you can read more about in his excellent 2021 book Living in Data: A Citizen’s Guide to a Better Information Future.

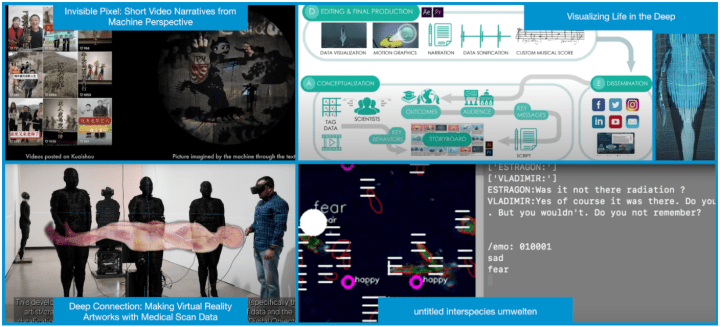

Perhaps the most stunning data manifestation I saw at VIS 2021 was the Life in the Deep project presented by Jessica Kendall-Bar. This project was a spectacular blend of sonification, animation, and visualization concerning the behaviour of marine mammals, with multiple outcomes targeted at scientists and the general public alike.

Another fascinating data manifestation was the Deep Connection project presented by Marilene Oliver. Deep Connection is a virtual reality installation combining medical scan visualization, sonification, and the triggering of music and animation from viewer interaction, namely holding the virtual hand of a patient and stepping into the volume occupied by the patient’s body.

Finally, I’ll highlight two data manifestation projects where the viewing experiences combine visualization with output from generative models: Interspecies Umwelten (presented by Joel Ong) and Invisible Pixel (presented by Junlin Zhu). The former communicates the behavior of microscopic algae both visually and through poetry generated by an instance of GPT2 trained on a corpus of Beckett. The latter visually summarizes a massive corpus of videos dedicated to capturing Chinese rural life not only via abstract visualization, but also via images generated with a text-to-image model, generated from the videos’ transcripts, comments, and text metadata. With a recent and growing interest in generative models and particularly in multimodal applications of GANs, we should expect to see more multimodal data manifestations leveraging these techniques in the coming months and years.

Tools for Visual Communication with Data

Semantic Snapping https://youtu.be/0wf7fuElPp8

Gemini 2 https://youtu.be/NYBWMnsohp8

Kori https://youtu.be/V7sGGWicuSA

While there were no general-purpose interactive visualization authoring tools debuting at this year’s conference along the lines of 2020’s StructGraphics or 2018’s Charticulator, there were several new tools and libraries introduced that address specific aspects of (interactive) visualization authoring.

First, there was Atlas.js (presented by Zhicheng Liu, who had previously led the Data Illustrator visualization authoring tool project). Atlas is a procedural (rather than declarative) grammar for generating charts. It is informed by the lessons learned while implementing interactive chart construction for Data Illustrator, which involved some fairly complicated state management. Liu and colleagues now invite those developing their own interactive chart construction tools to use Atlas as an underlying grammar.

One commonality across the other noteworthy tools appearing at VIS 2021 is their offering of design recommendations, so as to support a human-in-the-loop curation approach to visualization construction. For specifying animated transitions between charts, Gemini 2, presented by Younghoon Kim, recommends intermediate keyframes using the GraphScape framework. These recommendations manifest as Vega-Lite specifications, so conceivably any future interactive authoring tools built using Vega-Lite could also make use of Gemini’s animated transitions. Ideally, use of these keyframe recommendations will make narrative presentations incorporating sequences of statistical graphics easier to follow, whether they are triggered interactively or recorded as data videos or dataGIFs.

While Gemini considered sequences of charts presented over time, there were also sequences of charts arranged in space. Yngve Kristiansen spoke of situations where you have multiple adjacent charts (as arranged in a dashboard or infographic). It is not unusual for there to be redundancies across the charts, or there may be subtle discrepancies in how the data is represented. For example, adjacent charts might share a quantitative measure, but have unaligned axis domains. The same data could be expressed differently in different charts, or conversely, different data could be expressed in confusingly similar ways in different charts. To alleviate issues such as these, Kristiansen demonstrated a “semantic snapping” tool and rule set implemented within the Visception framework (a Vue.js-based visualization construction framework). The semantic snapping tool recommends ways to reduce redundancy and confusion across a set of adjacent charts, such as by harmonizing or merging them when they share data attributes and visual mappings. This research also realizes the design principles identified by Qu and Hullman with regard to keeping multiple views consistent, aligning these principles with Kindlmann and Scheidegger’s algebraic model for visualization, thereby closing the loop between user research, theory, and application development.
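To make the unaligned-axis case concrete, here is a minimal sketch of one possible harmonization: giving adjacent charts that share a quantitative field the same axis domain. It is an illustration only, not how Visception or the semantic snapping rule set is implemented; the `Chart` structure and the `harmonize_domains` helper are hypothetical names introduced for this example.

```python
from dataclasses import dataclass, replace
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Chart:
    """Hypothetical, minimal description of one chart in a dashboard."""
    name: str
    y_field: str                    # quantitative measure shown on the y axis
    y_domain: Tuple[float, float]   # (min, max) currently used for that axis


def harmonize_domains(charts: List[Chart]) -> List[Chart]:
    """Give every chart that shares a y field the same (union) axis domain."""
    shared: Dict[str, Tuple[float, float]] = {}
    for chart in charts:
        lo, hi = chart.y_domain
        if chart.y_field in shared:
            cur_lo, cur_hi = shared[chart.y_field]
            shared[chart.y_field] = (min(lo, cur_lo), max(hi, cur_hi))
        else:
            shared[chart.y_field] = (lo, hi)
    # Return new Chart objects; charts with a unique field keep their domain.
    return [replace(c, y_domain=shared[c.y_field]) for c in charts]


dashboard = [
    Chart("sales by region", "sales", (0, 100)),
    Chart("sales by month", "sales", (20, 250)),
    Chart("profit by month", "profit", (-5, 40)),
]
aligned = harmonize_domains(dashboard)
```

In this sketch the two "sales" charts end up with one common domain, so bar heights can be compared across them, while the "profit" chart is left alone. A recommendation-based tool would presumably surface such a change as a suggestion for the author to accept or reject rather than apply it automatically.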

The last tool that I’ll highlight here is Kori (presented by Shahid Latif), one that recommends ways of interactively synchronizing charts with narrative text. The Kori prototype is essentially a word processor that allows you to add Vega-Lite chart specifications to the margin. As you write in the text editor, entities and references to the charts are subtly flagged (similar to the visual appearance of grammar and spelling issues in other word processors). Rather than pointing out errors, however, these highlights invite the author to opt into and adjust bindings that highlight and filter content shown in the adjacent charts. The author can also manually add their own bindings. The result is a reading experience where these highlights and filters can be revealed to the reader as they navigate the document.

Reflecting on Research and Practice

Despite IEEE VIS being a primarily academic affair, I’m always happy to see conversations with practitioners and discussions of their practice at the conference. Many of these conversations happen during the VisInPractice event, an event that I co-organized for the fourth and final time. I would particularly recommend watching a recording of the VisInPractice panel discussing contemporary visualization development ecosystems with Zan Armstrong (Observable), Stephanie Kirmer (project44), Nicolas Kruchten (Plotly), and Krist Wongsuphasawat (Airbnb), moderated by Sean McKenna (Lucid).

Beyond VisInPractice, I also enjoyed the conversation between Jen Christiansen (Scientific American) and Steven Franconeri (Northwestern University) that kicked off the VisComm workshop. Jen mentioned her growing set of visualization design resources that she shares with practitioners. Noticeably under-represented in her list are resources from the IEEE VIS community, which should be a call to action. Researchers should address more of their writing to practitioners (a call to action that was echoed in the VisInPractice panel discussion on writing about visualization). Later in the week, Paul Parsons presented the results of surveys and interviews with practitioners regarding visualization design, and similarly he found that practitioners are largely unaware of much of the theoretical work from the visualization research community. Moreover, Parsons challenged the visualization research community to question the value of prescriptive design recommendations emanating from our studies. We should see visualization in practice not as practical demonstrations of scientific findings, but rather as a practice that has its own forms of knowledge production. In other words, we should do a better job of understanding practitioners’ design knowledge before putting our own design guidance out into the ether.

To end on an optimistic note, I was pleased to see a renewed conversation around aesthetics in communicative visualization sparked by Maryan Riahi and Benjamin Watson’s review paper, one which hopefully leads researchers to stop thinking about aesthetics as something that can be objectively measured and compared, and to stop assuming that it is both possible and desirable to visualize data in an objectively beautiful way. A project that illustrates this conversation is Caitlin Foley and Misha Rabinovich’s Surface Tension project, in which an aesthetic evoking a sense of disgust can serve as a more powerful communicative tool than one evoking an appreciation of beauty. As with the aesthetics conversation, I was similarly pleased to see Derya Akbaba’s refutation of chartjunk: to be clear, this is not a refutation of a (loosely-defined) category of graphical elements, but a refutation of the concept itself. Akbaba argues that the concept is counterproductive, one used to devalue situated and designerly ways of thinking, and one that ultimately is used to discredit design practices that clash with a popular Tufte-esque minimalism. I feel similarly about the negative connotations of terms like ‘embellishment’ or ‘flourish’, and would prefer to shift the conversation toward effective combinations of figurative and non-figurative elements in visualization design, elements working in conjunction to communicate insights to the viewer. Finally, I argue that a dogmatic avoidance of chartjunk inhibits the incorporation of humor and levity in data visualization design; and as the work of Nigel Holmes, Randall Munroe, and Mona Chalabi shows, humor and levity are some of our most powerful communicative tools.

Yiren’s highlights

This was my second VIS conference and also my second year as a student volunteer. I appreciate that VIS was well organized in a remote format during the pandemic. In this highlight, I summarize a couple of the paper sessions, workshops, symposiums, and application spotlights that inspired me most.

VIS X AI

On Oct 25th, the 4th workshop on Visualization for AI explainability was launched online. With the rapidly growing complexity of AI, the need to understand how these AI models work has increased. The data visualization community is using its expertise to bring new insights to AI explainability.

David Ha from Google Brain gave a fantastic talk about using a webpage as a medium for communicating research ideas. He provided several examples of data visualization hosted online. Some of them presented the effects of AI, some of them visualized the AI model, and some of them were interactive web applications where you could tweak the values of the model.

Beyond explainable AI, the advantage of using a webpage persists for communicating research ideas and outcomes. On one hand, interactive applications and animation convey information in a more accessible way compared to images on paper. On the other hand, exploring a web demo takes much less effort than running the original code, which may require a complex environment setup.

Here is a selected list of websites mentioned in this talk.

- https://otoro.net/ml/pendulum-esp/ Neural network controller

- https://otoro.net/slimevolley/ Neural slime volleyball

- https://otoro.net/ml/neat-playground/ Backprop NEAT

- https://rednuht.org/genetic_cars_2/ Evolving cars with genetic algorithms and Box2D

- http://eplex.cs.ucf.edu/ecal13/demo/PCA.html Interactive PCA graph

- https://mattya.github.io/chainer-DCGAN/ GAN face generator

- https://affinelayer.com/pixsrv/index.html Interactive Image Translation with pix2pix-tensorflow

- https://distill.pub/2020/growing-ca/ Growing Neural cellular automata

- https://distill.pub/2020/selforg/mnist/ Self classifying MNIST Digits

- https://magenta.tensorflow.org/assets/sketch_rnn_demo/index.html Sketch RNN

Interaction

Interaction in data visualization is one of my favorite research topics. This topic combines expressive graphical representations and effective user interaction. In this session, there were many creative works presented.

Gastner et al. showed their work on evaluating the effectiveness of interactive contiguous area cartograms. A cartogram is not a usual map format and researchers believe it to be less effective at communicating data than a traditional choropleth map. This work added interactive features to cartograms and conducted experiments to demonstrate that the effectiveness of cartograms can be improved by adding animation and other interactive features.

Interacting with data visualizations through natural language has emerged as a research direction in recent years. Ran Chen presented Nebula, an innovative grammar for interacting with multiple coordinated views via natural language. Nebula users can not only manipulate one data visualization, but they can also interact with multiple linked data visualizations via natural language.

The paper from Yifan Wu et al. is a special one. This paper neither explores innovative interaction methods nor attempts to create interactive data visualization for specific datasets. Instead, the authors tackle the problem of distributed data and asynchronous event handling in interactive data visualization. In many complex systems, data is stored in distributed databases, and can only be accessed by a query from the backend due to the large data volume. Developers have to juggle low-level asynchronous database querying while also handling interaction events. They built a middleware named DIEL to address this development challenge.
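To illustrate the kind of bookkeeping this involves, here is a minimal sketch of one common hazard: responses to slow remote queries arriving out of order, so that an older result overwrites a newer one. The sketch is not DIEL's API or approach; the `LatestOnlyView` class, its methods, and the simulated delays are all hypothetical, and `asyncio.sleep` merely stands in for a remote database query.

```python
import asyncio
from typing import List, Optional


class LatestOnlyView:
    """Hypothetical view state that ignores responses to superseded requests."""

    def __init__(self) -> None:
        self._latest_request_id = 0
        self.rendered: Optional[str] = None
        self.dropped: List[str] = []

    async def on_interaction(self, query: str, delay: float) -> None:
        # Tag each request so its response can be matched when it returns.
        self._latest_request_id += 1
        request_id = self._latest_request_id
        result = await self._run_query(query, delay)
        if request_id == self._latest_request_id:
            self.rendered = result        # still the newest request: render it
        else:
            self.dropped.append(result)   # a newer interaction superseded it

    async def _run_query(self, query: str, delay: float) -> str:
        await asyncio.sleep(delay)        # stand-in for a slow remote query
        return f"rows for {query}"


async def demo() -> LatestOnlyView:
    view = LatestOnlyView()
    # The first interaction is slow and the second is fast,
    # so the responses arrive in the opposite order of the requests.
    await asyncio.gather(
        view.on_interaction("brush A", 0.05),
        view.on_interaction("brush B", 0.01),
    )
    return view


view = asyncio.run(demo())
```

Here the view ends up showing the result of the most recent interaction ("brush B"), and the late response for "brush A" is discarded. Writing this logic by hand for every chart and every query is the sort of low-level juggling the paragraph above describes.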

Immersive Environments, Personal Vis, and Dashboards

Virtual Reality (VR) is not a new field of research; it has lived in research labs for a very long time. However, the new generation of VR equipment makes it accessible to the public and has refreshed interest in this research field. In this paper session, I would like to highlight two papers focused on VR. One focused on fundamental perception questions in the VR environment; the other is a brilliant application of VR.

The work from Kurtis Danyluk et al. compares data visualization in virtual reality and physical visualizations. VR devices make it possible for data visualizations to transcend the limits of 2-D media such as paper and screens. As its name implies, virtual reality is a simulation of the real world. The way we view and interact with data visualization in VR is similar to interacting with physical data visualizations. In this research, they conducted experiments to compare participants’ performance when using VR data visualization and physical data visualization. Though physical data visualization outperformed VR data visualization, they discovered both limitations of current VR devices and new features brought by VR, which are impossible with physical data visualization. They suggest hybrid visualization and interactive features can close the gap between physical and virtual visualizations.

My second highlight is an application leveraging VR and data visualization to help discover extra insight from data. In recent years, tactic analysis has been widely used in racket sports, and researchers use data visualization to support expert evaluation of tactics data. For tactic analysis, however, 3-D data visualization can reveal every spatial attribute of the tactic data, which makes patterns easier to discover. TIVEE paired 3-D data visualization with virtual reality devices, allowing users to explore and explain badminton tactics from multiple levels.

The Road Ahead

Virtual IEEE VIS 2021 was another rich experience thanks to the efforts of all committees, organizers, and student volunteers. IEEE VIS 2022 is scheduled to take place in Oklahoma City and we are already looking forward to reconnecting with our community. In the meantime, take advantage of the recorded talks and presentations and catch up on all your missed events online. You can navigate through this year’s schedule here. Be sure to let us know your own highlights!