Have you ever spent days poring over charts and diagrams only to feel no closer to understanding the problem? You’re not alone. Consider the findings from a recent Oracle study titled “How Data Overload Creates Decision Distress,” which surveyed 14,000 people, including employees and business leaders across 17 countries. A staggering 70% of respondents admitted to giving up on decisions because of overwhelming data. The report also underscored the critical importance of decision intelligence for business leaders. A resounding 93% of business leaders believed that having the right decision intelligence can make or break an organization’s success.

So, can we make the data the guiding light it has to be without shining too brightly? Let’s closely analyze how data is transformed into visuals, which is where I believe the real challenge arises. Unlike earlier automated stages, this step depends on human interpretation to turn pixels into actionable knowledge. It’s where noise peaks and misinterpretation risks are highest, from superfluous graphics and irrelevant metrics to data integrity issues. We also need to keep in mind that from the perspective of information theory, which studies how to transmit signals efficiently, a dashboard is a communication channel.

Channels, in our case dashboards, have limited capacity, however, and that limit is set by the receiver’s cognition. The brain’s maximum speed of conscious information processing is believed to be around 120 bits per second [1]. Although the brain can take in up to 11 million bits per second, only about 120 bits per second reach conscious processing, by design. This efficiency allows us to filter and compress millions of data points down to a fraction of the most critical information, which we can then process immediately and consciously.

Additionally, our attention span is measured in seconds. With the demands of multitasking, meetings, random work chat messages, and calls, the time available to process incoming signals from a digital canvas keeps shrinking. By some estimates, our attention span has fallen from about 21 seconds to closer to eight seconds.
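To make these limits concrete, here is a back-of-envelope calculation that uses only the figures quoted above: about 11 million bits per second in total, about 120 bits per second processed consciously, and attention spans of 21 versus eight seconds. The constant and function names are illustrative. Treating the conscious rate as a fixed ceiling is a simplifying assumption, not a model of how attention actually works.

```python
# Back-of-envelope "channel budget" using the figures quoted in the text.
# Assumption: the conscious rate is treated as a fixed ceiling for one glance.
TOTAL_BITS_PER_SECOND = 11_000_000   # information reaching the brain
CONSCIOUS_BITS_PER_SECOND = 120      # information processed consciously


def conscious_share(total: float = TOTAL_BITS_PER_SECOND,
                    conscious: float = CONSCIOUS_BITS_PER_SECOND) -> float:
    """Fraction of incoming information that is processed consciously."""
    return conscious / total


def attention_budget_bits(attention_seconds: float,
                          rate: float = CONSCIOUS_BITS_PER_SECOND) -> float:
    """Upper bound on bits a viewer can consciously take in during one attention span."""
    return attention_seconds * rate


if __name__ == "__main__":
    print(f"Conscious share of input: {conscious_share():.6%}")
    print(f"Budget with a 21 s attention span: {attention_budget_bits(21):.0f} bits")
    print(f"Budget with an 8 s attention span: {attention_budget_bits(8):.0f} bits")
```

Under these assumptions, the budget per glance drops from 21 × 120 = 2,520 bits to 8 × 120 = 960 bits. That is why every visual on the canvas has to earn its place.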

As we process the incoming signals, spending our precious seconds and conscious effort, we can hold only five to seven items of information in working memory at once. So even when there is enough time and attention to process the signals from data, these signals must come in small doses.
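One way to respect the working-memory limit is to deliver signals in batches. The sketch below splits a list of KPIs into batches no larger than a chosen limit. It assumes the list is already sorted by importance, so the first batch carries the most important signals. The default of seven (the upper end of the range above), the function name `chunk_signals`, and the KPI names are all hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")

# Hypothetical default: the upper end of the five-to-seven item range.
MAX_ITEMS_IN_WORKING_MEMORY = 7


def chunk_signals(items: Sequence[T],
                  max_items: int = MAX_ITEMS_IN_WORKING_MEMORY) -> List[List[T]]:
    """Split an importance-ordered list of signals into batches of at most max_items.

    Each batch could become one canvas, or one step of progressive disclosure.
    """
    if max_items < 1:
        raise ValueError("max_items must be at least 1")
    return [list(items[i:i + max_items]) for i in range(0, len(items), max_items)]


# Hypothetical KPI list, already ordered from most to least important.
kpis = ["Revenue vs plan", "Gross margin %", "EBITDA margin %", "Cash conversion",
        "Headcount vs budget", "Churn rate", "NPS", "DSO", "Inventory days"]

for page, batch in enumerate(chunk_signals(kpis, max_items=5), start=1):
    print(page, batch)
```

Choosing a conservative limit such as five leaves headroom for the labels, legends, and filters that also compete for working memory.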

Noise is also amplified when there is a significant mismatch in domain expertise between the sender and the user. Typically, managers are subject matter experts, while the analysts who build the dashboards are not. If this mismatch is too great, the dashboard becomes inherently noisy, leaving valuable information trapped and ineffective.

Furthermore, users often need to improve their data literacy by learning to navigate dashboards effectively, including how to use filters and interactive features.

Turning data into effective signals

Dashboard-ready analytics and metrics

First, we need to maximize the informational value per visual. To do that, metrics must have three qualities:

1. Be relative or an index compared to a benchmark

2. Be filtered to the dashboard use case

3. Be set against a comparable benchmark (e.g., plan, budget, or limit).

Be relative or an index compared to a benchmark

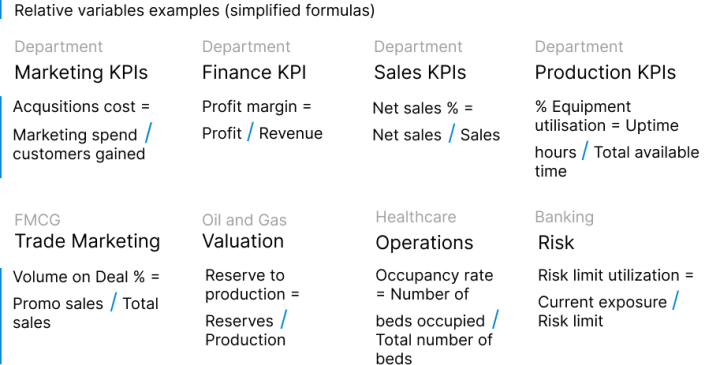

Our understanding of crafting relative metrics for data-driven decisions has advanced significantly in recent decades. Techniques like the balanced scorecard, unit economics, ratios, and contribution analysis share a common thread: they all reveal how one metric relates to another. Consequently, a single relative metric can offer threefold or more informational value for the same user attention time, since it conveys two underlying values and the relationship between them. For instance, a visual showing margin percent proves more insightful to a decision-maker than standalone profit figures. Across industries, whether in ratios, margins, or unit economics, a common pattern emerges: a numerator and a denominator. As these index KPIs evolve, they accumulate even richer insights by tapping into the values of underlying KPIs and their connections to benchmarks.
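A minimal illustration of why margin percent says more than profit alone: two units with identical absolute profit can have very different economics. The class name, unit names, and figures below are hypothetical. The relative metric is simply the numerator (profit) divided by the denominator (revenue).

```python
from dataclasses import dataclass


@dataclass
class UnitResult:
    name: str
    revenue: float
    profit: float

    @property
    def margin_pct(self) -> float:
        """Relative metric: profit (numerator) over revenue (denominator), in percent."""
        if self.revenue == 0:
            raise ZeroDivisionError(f"{self.name}: revenue is zero, margin undefined")
        return 100.0 * self.profit / self.revenue


# Hypothetical figures: identical absolute profit, very different efficiency.
units = [UnitResult("Unit A", revenue=1_000_000, profit=100_000),
         UnitResult("Unit B", revenue=4_000_000, profit=100_000)]

for u in units:
    print(f"{u.name}: profit {u.profit:,.0f}, margin {u.margin_pct:.1f}%")
```

A profit-only visual would show the two units as equal. The margin shows that Unit A keeps 10% of revenue as profit while Unit B keeps only 2.5%.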

Be filtered to the dashboard use case

It is not enough to visualize just one base formula. It is better to offer a range of derivative measures for selection, which lets users choose different aggregation levels and periods without interrupting the flow of analysis. Five to ten derivative formulas should support a single metric. Here, analytics becomes an instrument that maximizes the user experience.
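As a sketch of what "derivative measures" around one base metric might look like, the function below derives five views of a monthly series for a selected period:

- the month's value
- year-to-date total
- same month of the prior year
- year-over-year change
- rolling three-month average

This particular set of measures, the function name, and the sample data are illustrative assumptions. A BI tool would typically expose such measures as selectable options rather than as Python code.

```python
from typing import Dict, Optional, Tuple

# Monthly values of one base metric keyed by (year, month). Data is hypothetical.
Series = Dict[Tuple[int, int], float]


def derivative_measures(series: Series, year: int, month: int) -> Dict[str, Optional[float]]:
    """Derive several views of one base metric for the selected (year, month)."""
    current = series.get((year, month))
    ytd = sum(v for (y, m), v in series.items() if y == year and m <= month)
    prior_year = series.get((year - 1, month))
    # Skip YoY when either value is missing or the prior-year value is zero.
    yoy_pct = (None if current is None or not prior_year
               else 100.0 * (current - prior_year) / prior_year)

    # Walk back three calendar months, crossing year boundaries if needed.
    window = []
    y, m = year, month
    for _ in range(3):
        if (y, m) in series:
            window.append(series[(y, m)])
        y, m = (y - 1, 12) if m == 1 else (y, m - 1)
    rolling_3m = sum(window) / len(window) if len(window) == 3 else None

    return {"month": current, "ytd": ytd, "prior_year_month": prior_year,
            "yoy_pct": yoy_pct, "rolling_3m_avg": rolling_3m}


sales: Series = {(2023, 3): 80.0, (2024, 1): 90.0, (2024, 2): 110.0, (2024, 3): 100.0}
print(derivative_measures(sales, 2024, 3))
```

Returning `None` instead of a misleading number when history is missing keeps the derived measures from adding data noise of their own.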

Be set against a comparable benchmark

Expected KPI values are essential to the informational value of the whole visual. They provide context even to a person unfamiliar with the subject matter. Benchmarks, plans, or limits usually come from manual analysis done by managers, so these simple figures hide a wealth of research. A good dashboard must use this wealth to enrich all data with a straightforward method: comparing current values with planned values.
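The comparison of current and planned values can be sketched as a small function that returns four things for each KPI:

- the absolute variance
- the variance as a percentage of plan
- an attainment index (actual divided by plan)
- an on-track flag

The function name, the `higher_is_better` parameter (for cost-type KPIs where lower is better), and the sample values are hypothetical.

```python
from typing import Dict


def compare_to_plan(actual: float, plan: float,
                    higher_is_better: bool = True) -> Dict[str, object]:
    """Enrich an actual KPI value with its planned (benchmark) value."""
    if plan == 0:
        raise ValueError("plan must be non-zero to compute a relative variance")
    variance = actual - plan
    # For cost-type KPIs (higher_is_better=False), coming in under plan is good.
    on_track = variance >= 0 if higher_is_better else variance <= 0
    return {
        "actual": actual,
        "plan": plan,
        "variance": variance,
        "variance_pct": 100.0 * variance / abs(plan),
        "attainment": actual / plan,
        "on_track": on_track,
    }


revenue = compare_to_plan(actual=920, plan=1000)
costs = compare_to_plan(actual=450, plan=500, higher_is_better=False)
print(revenue)
print(costs)
```

The same −8% variance reads very differently on a revenue line than on a cost line. That is why the direction of "good" is an explicit parameter rather than an implicit convention.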

Resulting in last-mile analytics

I call metrics that have these three characteristics last-mile metrics. They are handed to the decision-maker the same way a postal package is handed to the recipient on the last mile of delivery. A product travels from the warehouse shelf to the back of the truck to the customer’s doorstep. In the same way, a data insight travels from the source database to the data warehouse and finally to the dashboard. This last leg of the delivery process is the most critical, both in the supply chain and in data analytics.

When metrics have these three qualities, the last-mile handover is likely to succeed.

Data modeling for best filtering and drill-downs

Second, dimensional filtering options let the user request more signals. For this, a data model must be in place. Data models dictate the table structures and their relationships. In my experience, the most valuable data model schema is the star schema, which uses a central fact table surrounded and supported by a range of dimension tables. Usually, each business process should have its own star. As complexity increases, stars can become constellations, in which several fact tables share the same dimension tables.

This approach takes more time to prepare and model than simply visualizing a pre-filtered query result. Still, because it gives the user greater control over how and when to drill down, it alleviates data overload significantly. With a digital canvas of visuals built on a star schema model, users can slice and dice the same absolute and relative metrics across all filters.
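To show how a star schema supports slicing the same metric across dimensions, here is a minimal sketch using Python's built-in `sqlite3` module, so it runs without a database server. It has one fact table (`fact_sales`) surrounded by three dimension tables. All table names, column names, and rows are hypothetical. The query computes margin percent by any whitelisted dimension attribute, with an optional region filter.

```python
import sqlite3
from typing import List, Optional, Tuple

# A minimal star schema: one central fact table plus three dimension tables.
DDL = """
CREATE TABLE dim_date    (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_region  (region_key INTEGER PRIMARY KEY, region TEXT);
CREATE TABLE fact_sales (
    date_key    INTEGER REFERENCES dim_date(date_key),
    product_key INTEGER REFERENCES dim_product(product_key),
    region_key  INTEGER REFERENCES dim_region(region_key),
    revenue     REAL,
    cost        REAL
);
"""


def build_demo_db() -> sqlite3.Connection:
    con = sqlite3.connect(":memory:")
    con.executescript(DDL)
    con.executemany("INSERT INTO dim_date VALUES (?, ?, ?)",
                    [(20240101, 2024, 1), (20240201, 2024, 2)])
    con.executemany("INSERT INTO dim_product VALUES (?, ?)", [(1, "Snacks"), (2, "Drinks")])
    con.executemany("INSERT INTO dim_region VALUES (?, ?)", [(1, "East"), (2, "West")])
    con.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?, ?)", [
        (20240101, 1, 1, 100.0, 60.0),
        (20240101, 2, 2, 200.0, 150.0),
        (20240201, 1, 2, 150.0, 90.0),
        (20240201, 2, 1, 250.0, 200.0),
    ])
    return con


def margin_by(con: sqlite3.Connection, dimension: str,
              region: Optional[str] = None) -> List[Tuple]:
    """Slice the same relative metric (margin %) by a dimension attribute."""
    allowed = {"category": "p.category", "region": "r.region", "month": "d.month"}
    col = allowed[dimension]  # whitelist: never interpolate raw user input into SQL
    sql = f"""
        SELECT {col} AS slice,
               SUM(f.revenue) AS revenue,
               100.0 * (SUM(f.revenue) - SUM(f.cost)) / SUM(f.revenue) AS margin_pct
        FROM fact_sales f
        JOIN dim_date d    ON f.date_key = d.date_key
        JOIN dim_product p ON f.product_key = p.product_key
        JOIN dim_region r  ON f.region_key = r.region_key
        WHERE (? IS NULL OR r.region = ?)
        GROUP BY slice
        ORDER BY slice
    """
    return con.execute(sql, (region, region)).fetchall()


con = build_demo_db()
print(margin_by(con, "category"))
print(margin_by(con, "category", region="East"))
print(margin_by(con, "month"))
```

Because every dimension joins to the same fact table, adding a new filter means adding a dimension table. No new pre-filtered query per visual is needed.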

As the visuals change, their movement captures the brain’s attention without conscious effort.

This changing canvas also helps mitigate the cognitive load problem that arises when too many visuals are shown at once. The user can interact with the channel and request more signals once ready (by choosing filters or pressing buttons). With each interaction, as the canvas becomes a familiar setting of graphs and charts, the variety and volume of visuals can increase gradually without causing data overload.

Data visualizations that maximize the use of the user’s attention

Third, from the perspective of information theory, data visualization principles must perform two critical functions. First, the visual hierarchy should control which signals (or visuals) are noticed first and which are noticed last.

The pre-attentive attributes of color and size are the best tools for creating a visual hierarchy. Research studies have shown that the brain can process visual information, including color, in as little as 13 milliseconds. Larger visualizations with greater color contrast get the first milliseconds of attention. As the visual system takes in information, it then scans for the next-largest, highest-contrast object. More important signals should therefore be placed toward the top left and less important ones toward the bottom right (for users who read from left to right).

Why does the order in which information is processed matter? As we know, the capacity of short-term memory is limited. Hence, the signal sender should not show too many visuals of equal importance simultaneously.
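The placement rule can be sketched as a layout function. It ranks visuals by an importance score and fills a grid in reading order (left to right, top to bottom), so the most important visual lands in the top-left cell. It then assigns emphasis tiers that mirror the pre-attentive cues of size and color. The importance scale, the three emphasis tiers, and the column count are hypothetical. For simplicity, the sketch ignores cells spanned by larger visuals.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Visual:
    title: str
    importance: int  # higher = more important (hypothetical scoring scale)


def layout_reading_order(visuals: List[Visual],
                         columns: int = 3) -> List[Tuple[str, int, int, str]]:
    """Place visuals in left-to-right, top-to-bottom order of importance.

    Returns (title, row, column, emphasis) tuples. The single most important
    visual gets the strongest emphasis, the rest of the first row a medium one,
    and later rows a muted one. Ties keep their input order (sorted is stable).
    """
    ranked = sorted(visuals, key=lambda v: v.importance, reverse=True)
    placed = []
    for index, visual in enumerate(ranked):
        row, col = divmod(index, columns)
        if index == 0:
            emphasis = "large, high-contrast"
        elif row == 0:
            emphasis = "medium"
        else:
            emphasis = "small, muted"
        placed.append((visual.title, row, col, emphasis))
    return placed


visuals = [Visual("Churn rate", 2), Visual("Revenue vs plan", 5),
           Visual("NPS", 1), Visual("Margin %", 4)]
for item in layout_reading_order(visuals, columns=2):
    print(item)
```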

The second function of data visualization principles is to minimize noise. From the perspective of information theory, noise is any unwanted variation or disturbance that can corrupt or interfere with the accurate transmission or reception of information. In our communication system, noise can enter in many ways:

- Visual noise: Visual clutter consists of elements that add no informational value or that distract from the intended message. It could be an excessive use of colors, icons, or graphical elements that do not help convey the relevant message.

- Data noise: Data noise consists of inaccuracies, inconsistencies, or irrelevant data points that can confuse or mislead the user. The sender can introduce this noise even with error-free data if they lack the domain knowledge to design visuals as signals.

- Interface noise: Interface noise refers to design or usability issues that hinder the user’s ability to interact with the canvas effectively. This could include confusing layouts, unclear labels, or unintuitive navigation, all of which make it difficult for the user to access and interpret the messages.
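Some data noise can be caught mechanically before it ever reaches a visual. The sketch below flags three common integrity problems in a list of records:

- duplicate keys
- missing values
- negative values in columns that should never be negative

The function name, the checks chosen, and the sample rows are hypothetical. The noise that comes from a lack of domain knowledge, also described above, cannot be detected this way.

```python
from collections import Counter
from typing import Dict, List


def find_data_noise(rows: List[Dict[str, object]], key: str,
                    non_negative: List[str]) -> Dict[str, list]:
    """Flag duplicate keys, missing values, and impossible negative values."""
    counts = Counter(row.get(key) for row in rows)
    issues: Dict[str, list] = {
        # key=str keeps sorting safe even if key values have mixed types
        "duplicate_keys": sorted((k for k, n in counts.items() if n > 1), key=str),
        "missing_values": [],   # (row index, column) pairs
        "negative_values": [],  # (row index, column) pairs
    }
    for i, row in enumerate(rows):
        for column, value in row.items():
            if value is None:
                issues["missing_values"].append((i, column))
        for column in non_negative:
            value = row.get(column)
            if isinstance(value, (int, float)) and value < 0:
                issues["negative_values"].append((i, column))
    return issues


rows = [
    {"order_id": "A1", "revenue": 120.0},
    {"order_id": "A2", "revenue": None},
    {"order_id": "A1", "revenue": 120.0},
    {"order_id": "A3", "revenue": -15.0},
]
print(find_data_noise(rows, key="order_id", non_negative=["revenue"]))
```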

One effective way to reduce clutter is to apply Gestalt principles. Four of them are especially useful here: proximity, similarity, continuity, and closure. Applying them makes it easier to delete unnecessary lines, to group similar items without spending pixels to highlight the grouping, and to reduce complex shapes to their essential forms. Less clutter means less noise without compromising the intended message. By using Gestalt principles to minimize noise, we rely on the mind’s existing, universal encoding mechanisms to eliminate unnecessary pixels, increasing the data-to-pixel ratio.
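The data-to-pixel ratio can be estimated by analogy with Tufte's data-ink ratio: the share of drawn pixels that encode data. The sketch below applies it to a hypothetical inventory of chart elements with invented pixel areas. It compares the chart before and after a Gestalt-driven cleanup. Proximity lets direct data labels replace a separate legend, and closure lets the eye perceive the plot area without a border box.

```python
from typing import List, Tuple

# Each element: (name, pixel_area, carries_data). Areas are hypothetical.
Element = Tuple[str, int, bool]


def data_pixel_ratio(elements: List[Element]) -> float:
    """Share of drawn pixels that encode data (analogous to the data-ink ratio)."""
    total = sum(area for _, area, _ in elements)
    data = sum(area for _, area, is_data in elements if is_data)
    return data / total if total else 0.0


chart: List[Element] = [
    ("bars", 12_000, True),
    ("data labels", 1_500, True),
    ("gridlines", 4_000, False),
    ("border box", 2_500, False),  # closure: the eye completes the plot area without it
    ("legend", 3_000, False),      # proximity: direct labels next to bars replace it
]

before = data_pixel_ratio(chart)
after = data_pixel_ratio([e for e in chart if e[0] not in {"border box", "legend"}])
print(f"before: {before:.2f}, after: {after:.2f}")
```

The data pixels are unchanged. Only non-data pixels are removed, so the ratio rises while the message stays intact.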

UX/UI design principles to customize the design to the use cases

One dashboard cannot send all of the messages, and trying to put all the information on a single canvas can overwhelm the user. A collection of interrelated dashboards and reports can solve this problem and provide insights in manageable doses. In essence, by creating a system of dashboards, we increase the channel’s capacity to transmit signals to each user. Each canvas communicates fewer signals, but the user can request more once their mind has processed the first batch. They do this through interactive elements such as page transitions, buttons, pop-ups, and drill-downs.
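A system of dashboards can be modeled as a directed graph. Each page lists the pages reachable from it through buttons, transitions, or drill-downs. A breadth-first search from the landing page then gives the minimum number of clicks to every page. This makes it easy to spot orphaned pages or pages buried too deep. The page names and the three-click threshold are hypothetical design choices, not an established rule.

```python
from collections import deque
from typing import Dict, List, Tuple

# Hypothetical dashboard system: page -> pages reachable from it in one click.
NAVIGATION: Dict[str, List[str]] = {
    "Executive summary": ["Sales overview", "Cost overview"],
    "Sales overview": ["Sales by region", "Sales by product"],
    "Cost overview": ["Cost by department"],
    "Sales by region": ["Order detail"],
    "Sales by product": ["Order detail"],
    "Cost by department": [],
    "Order detail": [],
}


def clicks_from(start: str, graph: Dict[str, List[str]]) -> Dict[str, int]:
    """Breadth-first search: minimum clicks from start to each reachable page."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for nxt in graph.get(page, []):
            if nxt not in depth:
                depth[nxt] = depth[page] + 1
                queue.append(nxt)
    return depth


def navigation_problems(graph: Dict[str, List[str]], start: str,
                        max_clicks: int = 3) -> Tuple[List[str], List[str]]:
    """Return (unreachable pages, pages deeper than max_clicks)."""
    reach = clicks_from(start, graph)
    unreachable = sorted(set(graph) - set(reach))
    too_deep = sorted(p for p, d in reach.items() if d > max_clicks)
    return unreachable, too_deep


print(clicks_from("Executive summary", NAVIGATION))
print(navigation_problems(NAVIGATION, "Executive summary"))
```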

We can also tailor each canvas to the receiver’s use case, because a channel’s capacity depends on the use case. The user, or receiver, could be a busy CEO with five minutes to get the most important signals; such use cases require specific visuals highlighting the current state versus the target. Or the user could be a middle manager tasked with looking deeper into the causes of an indicator’s recent underperformance; in this use case, we can use a table with many filters. Another, sometimes overlooked, channel is the mobile use case. A simple picture of key metric visuals sent regularly to a group chat of executives and managers can do wonders for delivering signals as fast as possible.

Conclusion

The data overload highlighted in the Oracle report can be addressed more effectively if we treat dashboards as communication channels. Here’s how:

- Establish a shared knowledge base between the sender and the user.

- Enable interactive dimensional filtering with star schema data models.

- Transform KPIs into relative forms by comparing them with expected values.

- Implement an organized system of dashboards with user-friendly navigation.

- Utilize pre-attentive attributes to establish a visual hierarchy.

- Reduce visual clutter using Gestalt principles.

Footnotes

[1] Csikszentmihalyi, Mihaly (1990). Flow: The Psychology of Optimal Experience.

This article was edited by Catherine Ramsdell.

Erdni Okonov

Erdni Okonov, CFA, is a rare professional with expertise in both corporate finance and business intelligence, and he honed his data visualization skills in both fields. He started his corporate finance career in investment banking in London, UK, and continued it in private equity, presenting data analysis to decision-makers at the most critical corporate events. When the need arose to monitor portfolio companies, Erdni became enthralled with business intelligence, merging his new BI skill set with his keen understanding of presenting data for executives. He introduced a BI system and executive dashboards into four portfolio companies, greatly enhancing their performance and transparency for the management team. Today, Erdni is an expert-level BI consultant helping medium and large companies in the USA and Europe. He has helped other PE funds, large CPG producers, healthcare companies, and financial institutions. He lives in Princeton, NJ, with his family, where he enjoys kayaking and running.