Even if you’ve never heard of data scaffolding, it’s likely that you have (or will face!) a data problem of your own for which scaffolding is the answer — or at least part of it. You may have even had a data problem in the past where scaffolding could have helped, but you just weren’t aware of it. Plenty of other folks have written about scaffolding (Phillip Lowe, Carl Allchin, Ken Flerlage), but I’d like to provide some of my own use cases for applying the technique in business settings.

What is data scaffolding and why would you need it?

Data scaffolding is a technique used to fill in pieces of data that are missing from your data source in order to help with analysis and visualization. Merriam-Webster’s online dictionary defines the word scaffold as:

1) a temporary or movable platform for workers (such as bricklayers, painters, or miners) to stand or sit on when working at a height above the floor or ground

2) a supporting framework

Data scaffolding is, quite literally, that platform or framework to facilitate a more thorough analysis of your data. You create and then insert records that you can infer must exist (albeit abstractly) or details that must be true, based on existing records.

Consider this typical scenario: you are asked to help discover trends over time to inform business decisions. However, new rows of data are only written to your data source in order to log changes, specific activities, or updates. While this is an efficient way to store data from a database administrator’s perspective, it presents a challenge for analysts like you. This is an opportunity to use data scaffolding.

What tools can you use for data scaffolding?

There are a number of tools that you can use for data scaffolding. Tableau Prep and Alteryx are two that I have used. Another option is using Excel in conjunction with some Tableau Desktop join calculations, however this may not be practical for large data sets, for situations where the Excel file needs to be refreshed periodically, or for when one of your data sources is from Tableau Server. In the examples below, I use Alteryx for data transformation and Tableau Desktop for visualization and analysis.

What kinds of data could this technique be applied to?

While there are numerous areas of business where scaffolding can be applied, I’ll discuss three areas here:

1) Human Resources (HR) data, specifically employee headcount tracking. Every business wants to know how many people are employed, hired, or terminated on any given day and this is often available as a point-in-time view. But ideally, a business wants to see how this information changes over time. They may also want to see this trend for each department, or by other meaningful categories, to understand what is happening in different areas of the business.

2) Customer headcount data, similar to HR headcount. Customers join and exit an organization on a regular basis and that organization likely wants to know how many customers they have on a given day, as well as how many were gained or lost each day.

3) Sales and inventory data is a little different from people-related data. Products are sold and returned; stock runs down and is replenished. People data is likely to be reported at a daily level (meaning you want to know ‘how many people do we have?’ each day), whereas sales and inventory data could be reported at an hourly, daily, or weekly level, or something else entirely. In addition to reflecting different dimensions of time, data related to a product may belong to multiple hierarchies, something like (but not limited to):

A. Product Detail: product ID> category > broader category

B. Where Product is Sold: product ID > store X > location X

C. Product Pricing

People data may certainly belong to multiple hierarchies as well, but product data will be structured differently to people data. This may require that distinct scaffolding techniques be applied.

Use case example 1 — scaffolding for headcount

The sample data below is intentionally simple. In a real working environment, there would be more nuance to the data structure that you would investigate before attempting any data transformation. But in this example, you have eight customers who have signed up for and then cancelled a service at various points in time. The data source receives new rows of data only when there is an update for that customer:

You have been tasked with showing the headcount trend of customers since this service was first offered. The business also wants to know how many customers signed up or cancelled each day. Losing customers (or employees) is also known in business as attrition or churn.

If you connect to this data as is in Tableau, for example, you can put together something that looks like this:

In the line graph, you can see that there is a sized circle representing the number of records on the dates associated with each record, but you don’t have a circle for any of the days that fall in between the dates in your data. This is because there is no data in your data source that represent those specific days.

You could review these 20 records and work out that Andrew was an active customer from August 4–9, he left on August 10, then came back about two months later on October 22. Similarly, you can infer that Sarah signed up on September 20 and has remained an active customer since then. You could probably comb through this list, manually work it out, and report back to the business as requested. But that would take awhile and you are only looking at eight customers. In the real world you are likely to be looking at far more records representing far more customers, so this method may not be realistic.

It’s time to scaffold. First, insert the dates that are missing (for each customer) and show that customer’s status on each date. Note that this is daily-level data.

Let’s go step by step:

1) Find the earliest date in the data by sorting in ascending order by date. In this case, it’s August 4, 2020. In a real world application of scaffolding, you may need to do some internal research to ensure you have all the right data and are interpreting it correctly before you start.

2) Determine all the days that exist between the earliest date in the data and today. In this example, today is February 1, 2021. This means the last date you scaffold will be yesterday, or in this case January 31, 2021. There are 181 days that exist between August 4, 2020 and January 31, 2021.

3) For each row in your original data (20 rows), you need to attach or append 181 days to each row. Pause and think about this. You started with 20 rows, and you’re attaching 181 rows onto each one. That means you will now have 3,620 rows (181 x 20).

4) Now you need to filter the rows you created in step three so that you are looking at only customer records that make sense for your analysis. You want to see each customer’s status each day since they first became a customer. For example, why would you have records for Sarah prior to when she joined on September 20, 2020? Or why would you need to have records for Sabrina prior to December 3, 2020? You don’t need those records and they don’t tell you anything since they did not exist as customers prior to those dates.

Create a formula to flag and filter out rows that don’t make sense. The formula is scaffold date >= date in the original data set. Your goal is to keep the records where the date you’re inserting is either the same as the date in the original data (for a given customer) or later. After you filter those records out, you are left with 1,766 records.

5) Next, you need to quantify the statuses of active and terminated customers (called Active and Terminated) by creating a new column called Status Number using the Formula tool. The formula in this example is pictured below. Ensure the data type of this new column is set to a numeric one.

Once the Status Number column has been created, you can tell Alteryx to sum the Status Number for each date, which now has numeric values of 0, 1, or -1. This will give the net number of customers on each day.

Note that no line was assigned the value of 0 because it’s a small and tidy data set. In the real world, you would need to carefully consider your data structure when working out what this formula should be.

You could stop here at the summary tool if all you needed was the net headcount figure. But, the business asked to also see the number of customers gained and lost each day.

6) So far, for each customer, you have a row that contains every single date since they first became a customer along with their status on that date (either 1 or -1). So the daily status is populated on every row.

To determine the number who joined on a particular date, the customer record must reflect that date and count them as +1 on that date only (not all the dates in between). Similarly, for terminations, you want the customer record to reflect that date and be counted as -1 on that date only (again, not on all the dates in between). On the dates in between (for both + and -), you want to see nothing (0 or null).

You have a column for net (end of step 5), but now you need to create a new column for gains and a new column for losses. Putting them in their own columns allows you to aggregate them separately from each other and from net.

7) To meet this objective, you join the original data (20 rows going into the left of the Join tool) onto where you were at the end of step five (1,766 records), ensuring you join on all the correct fields. Once joined, that status of Active or Terminated will appear on the relevant date only in a new column, which is what you need. The number of records here will not increase because each of the 20 original rows will just be tacked on to the 1,766 records as new columns.

Note that transaction ID is critical to including in the join criteria for cases where the same customer(s) arrives and leaves more than once. The transaction ID ensures the records join up correctly.

The Append Fields tool you used in step three behaves differently than the Join tool. Using Append ‘filled in’ the status on every date (which is what allowed you to calculate the net), whereas using Join here filled in the status only on the date when the activity happened (which is what will allow you to calculate gains or losses on a single date). Similar to what you did for net, you can use a formula to convert Active to a numeric value of 1 and Terminated to -1. But you do this on the new status columns that were joined, so that when the formula sees the null in the new columns, it will populate a 0 for that line (instead of 1 or -1 like it did for net).

8) You are left with 181 records that contain a date from August 4, 2020 through January 31, 2021 and three columns: net, gains, and losses. Recall in step two that you have 181 days in between your earliest date and today. So you have the data prepared sufficiently for time-trend analysis.

Here is what the entire Alteryx workflow looks like:

If you were to copy and paste this transformed data into Tableau, for example, you could create a graph like the one below, which is entirely different from your original, and it is more meaningful, as every single day is accounted for showing the net number of customers for that day:

If you hover over any date in the above, you can see the net, gains, and losses for that specific day — here are three examples below:

Use case example 2 — sales and inventory scaffolding

This example is quite detailed, so I want to instead write about where scaffolding comes into play that you can apply to a similar situation.

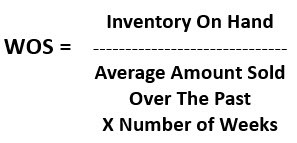

You have been asked to calculate a common key performance indicator (KPI) used in inventory analysis — referred to as weeks of stock (also known as weeks of supply or WOS) — for each of your products and store locations. You need to be able to show WOS at various levels in the business (category, store, location) over time.

In this case, our numerator (inventory data) is stored separately to our denominator (sales data) and both are reported on a weekly basis.

Each of these sources receives new rows of data only when there is a change. This means that:

- some weeks the sales source gets new rows, but inventory doesn’t

- some weeks the inventory source gets new rows, but sales doesn’t

- some weeks there is no change to either sales or inventory, so neither source gets new rows

Pause a moment to think about the WOS equation. Notice that the denominator needs the average amount sold over the past X number of weeks. That’s important because those weeks need to be consecutive to calculate an accurate average, which means you can’t have gaps in the data for the weeks when nothing changed.

Let’s get to work.

First, join the two sources. This is fairly straightforward in this case as they have similar naming conventions and structure. This will expose some gaps in the data. The gaps come from the three bullet points above. For bullet one, this inventory data can be completed by filling in zeros. For bullet two, this sales data can be completed by filling in zeros. But for bullet three, you need to do three things:

1) You must first insert the weeks that are not present in the data at all (this is similar to the first headcount example) for all product combinations. These are your dummy rows.

2) These dummy rows have no product, store, or location information because you have created these rows from scratch. These details will eventually need to be populated.

3) The values in the dummy rows for both sales and inventory should be filled in with zero, since you know that there was no change or activity for them (which is why they don’t exist in the original data).

The overall idea with scaffolding is to ‘complete’ your data set so that you can work with it accurately and fully.

The image below shows an Alteryx workflow that was built to address this business challenge. The top section shows the two sources being joined and the bottom section is where the gaps are filled in via scaffolding.

The Summary tool generates product detail, store, and location hierarchies (so these can be used to fill in gaps) by grouping relevant items from the joined sales and inventory data, and the Generate Rows tool is used once again to explode out the week-ending dates.

Now you have a complete data set (two independent data sources joined up and scaffolding used to fill in the missing data) that can be viewed in Tableau, for example, and you can perform accurate calculations to visualize WOS-over-time easily.

In the example below, parameters have been created so that the user has control over the number of weeks back they want to look (for the denominator) and they can view the WOS in terms of sales units or sales dollar value. Table calculations were used, which means the weekly data must remain in the view for the calculations to be accurate. However, it would be possible to create something similar using calculated fields instead, now that you have the data structure in order and in a single source.

Data that is refreshed incrementally may not contain all the data points required to support business analysis. Data scaffolding is a technique that allows you to build a framework that ‘completes’ your data set so that it can be analyzed and visualized more thoroughly. I hope the examples I have shared will help you solve similar data problems you might encounter.